Where technology meets compassion.

OpenPredictor uses a carefully developed machine learning model to predict each patient’s risk of post-operative complications, aiding medical professionals’ decision-making and helping hospitals optimise their pre-operative pathways for elective surgery.

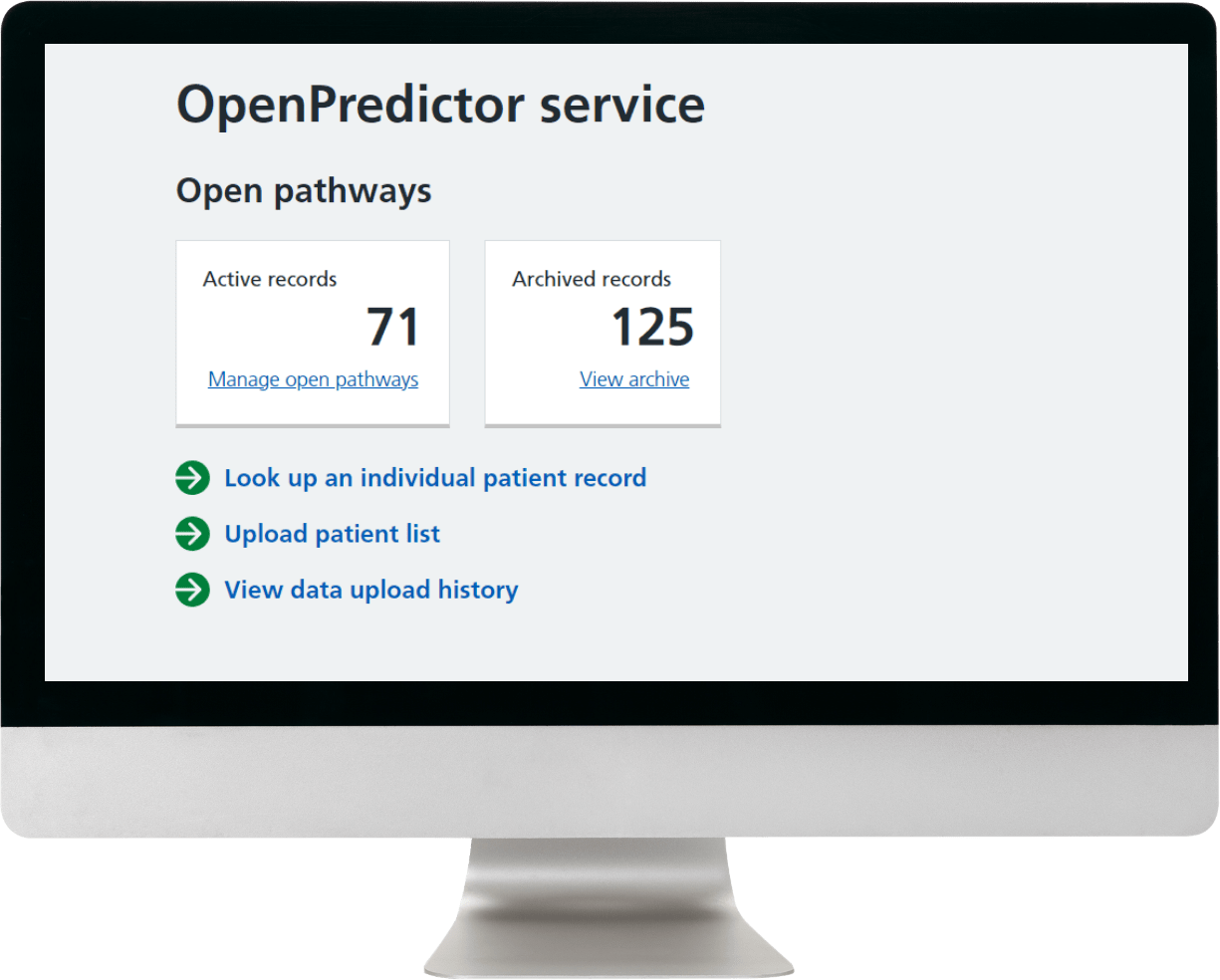

OpenPredictor is helping UK hospitals transform pre-operative assessment pathways — streamlining processes, reducing costs, and accelerating access to elective surgery by tackling waiting lists and surgical backlogs.

Features & Benefits

By accurately stratifying each patient’s surgical risk, OpenPredictor enables healthcare providers to streamline elective pre-operative pathways and identify early opportunities to optimise patient health.

When OpenPredictor is integrated into hospital systems, clinicians can seamlessly access patient-specific risk reports, supporting efficient, informed decision-making and enhancing the quality of pre-operative care.

At the click of a button, staff can review predicted risk levels across entire cohorts of waiting patients, supporting optimal patient allocation, increased use of elective hubs and ambulatory services, and improved productivity for pre-assessment teams.

By enhancing waiting list management, hospitals can make more effective use of available resources, better allocate patients to appropriate pre-surgical preparation pathways, and reduce last-minute cancellations.

OpenPredictor is proven to predict patient risk with an accuracy comparable to that of a trained clinician.

Registered as a medical device, OpenPredictor provides validated clinical support within the pre-operative assessment pathway.

Responsible Development

The responsibilities involved in deploying medical software are substantial. Throughout its development, OpenPredictor has been recognised as a model of good practice and safety in the application of machine learning.

Its development has been guided by the UK Government’s AI Regulatory Principles, ensuring a responsible, transparent, and clinically robust approach.

Safety, security and robustness:

Nothing we develop is deployed in a medical setting until it has been clinically validated and proven safe for use. We fully understand the risks associated with applying AI in healthcare and take every precaution to ensure our technology meets the highest standards of safety and reliability.

Transparency and explainability:

We are committed to transparency in how OpenPredictor has been developed and how it operates. When working with healthcare providers, we collaborate closely to ensure they understand, at every stage, what we are doing and why.

OpenPredictor includes built-in explainability tools that allow us to trace and clearly explain the outcomes it generates. As the model’s performance can vary when working with different datasets and service environments, the ability to track and understand these changes enables us to keep our stakeholders fully informed and engaged.

Fairness:

We recognise that variations in data and data collection practices can introduce and propagate bias when used with machine learning. Our work focuses on identifying these variations and understanding where protected characteristics impact model performance, ensuring that we develop models that are fair across the populations we serve.

We continuously monitor OpenPredictor’s machine learning models for data shifts that could introduce bias over time. Where necessary, we adjust model training to help reduce healthcare-related inequities. We take a proactive approach to post-deployment surveillance, identifying and addressing emerging inequities to ensure our models continue to support fair and equitable healthcare outcomes.
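As an illustration of what monitoring for data shift can look like in practice, the sketch below computes a Population Stability Index (PSI) between a baseline sample and a more recent sample of a single input feature. This is a generic, widely used drift measure and is offered only as an example; it is not a description of OpenPredictor’s actual monitoring method. The function name, the bin edges, and the age values are all hypothetical.

```python
import math
from bisect import bisect_right


def population_stability_index(baseline, recent, edges, eps=1e-6):
    """Return the PSI between two samples of one numeric feature.

    `edges` are ascending bin boundaries shared by both samples.
    A PSI of 0 means the binned distributions are identical;
    larger values indicate a larger shift.
    """
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")

    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor each proportion at eps so empty bins do not cause log(0).
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(recent)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical example: patient ages at training time vs. a recent cohort.
training_ages = [30, 45, 50, 65, 70, 85]
recent_ages = [70, 75, 82, 85, 90, 95]
age_bins = [40, 60, 80]  # assumed bin edges, for illustration only

psi = population_stability_index(training_ages, recent_ages, age_bins)
print(f"PSI for age: {psi:.3f}")
```

Any alert threshold on a measure like this would need to be chosen and validated for the local population; the same calculation could also be run separately for each patient subgroup to see whether a shift affects some groups more than others.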

Accountability and governance:

As with any medical device, robust oversight is essential to ensure that OpenPredictor is used safely and in ways that benefit patients. Accountability and outcome review measures are a central part of how we implement our software in clinical practice.

We work closely with healthcare providers to ensure OpenPredictor is deployed safely, supervised appropriately, and used strictly according to its intended purpose as a clinical decision support tool, so that a clear and continuous chain of accountability is maintained.

Contestability and redress:

Patients are our most important stakeholders, and they have a right to understand how our software supports their care and to hold us to account. We work closely with healthcare providers to ensure that OpenPredictor’s role is always transparent, clearly understood, and open to scrutiny by all stakeholders.

We conduct periodic reviews of model performance and clinical outcomes to ensure that OpenPredictor continues to deliver meaningful benefit to healthcare providers and, ultimately, to patients.

Technical Details

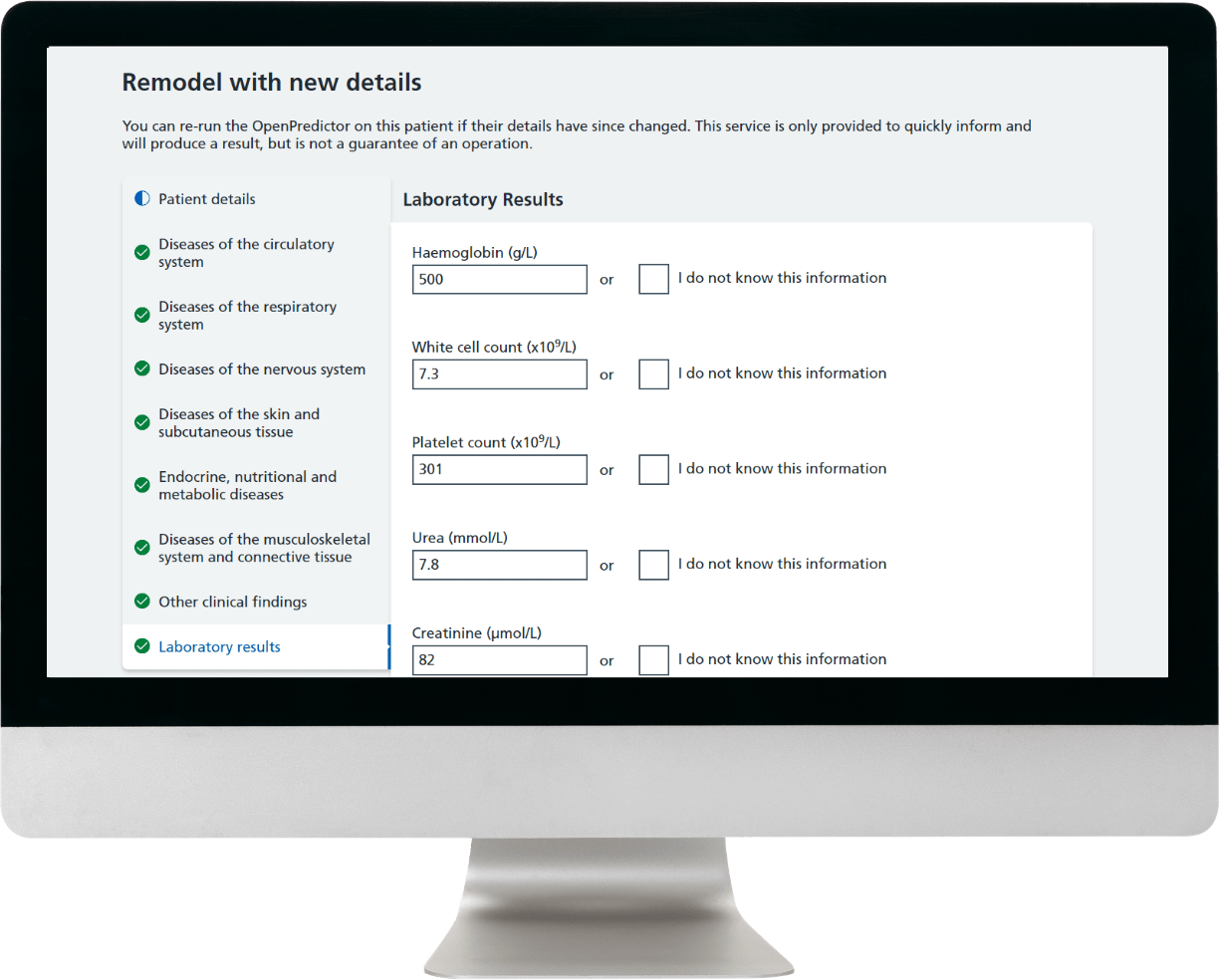

OpenPredictor is a machine learning tool trained on anonymised patient data to predict the risk of post-operative complications across diverse patient groups.

When patient data is processed through the software, OpenPredictor assesses the inputs against its trained model to generate a predicted risk level. This output is designed to support, not replace, clinical decision-making by trained healthcare professionals.
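The step from a model output to a risk level can be pictured as a simple banding of a predicted probability. The sketch below is purely illustrative: the band names, the cut-off values, and the function name are assumptions made for this example and do not reflect OpenPredictor’s actual categories or thresholds.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical bands and cut-offs, chosen only for illustration.
RISK_BANDS = ("low", "medium", "high")
THRESHOLDS = (0.05, 0.20)


def risk_level(probability, thresholds=THRESHOLDS, bands=RISK_BANDS):
    """Map a predicted complication probability to a named risk band."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # A probability equal to a threshold falls into the higher band.
    return bands[bisect_right(thresholds, probability)]


# Summarise a hypothetical waiting-list cohort by band.
cohort_probabilities = [0.02, 0.04, 0.11, 0.18, 0.35]
summary = Counter(risk_level(p) for p in cohort_probabilities)
print(dict(summary))
```

In a real deployment the banding would be set and reviewed with clinicians, and the resulting level would inform their judgement rather than determine the pathway on its own.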

The software utilises Azure Machine Learning Studio (AML) for model training and incorporates Microsoft Responsible AI Tools to enhance transparency and explainability in its outputs.

OpenPredictor’s machine learning model is based on a polynomial logistic regression approach, refined through years of research and testing to address the specific complexities of patient medical data effectively.
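For readers unfamiliar with the term, polynomial logistic regression is ordinary logistic regression applied to polynomial combinations of the input features (squares and pairwise products, for a degree of 2). The sketch below shows the general technique only and is not OpenPredictor’s implementation: the degree, the two input features, the weights, and the function names are all hypothetical.

```python
import math
from itertools import combinations_with_replacement


def polynomial_features(x, degree=2):
    """Expand a feature vector into all monomials of degree 1..degree."""
    expanded = []
    for d in range(1, degree + 1):
        for indices in combinations_with_replacement(range(len(x)), d):
            term = 1.0
            for i in indices:
                term *= x[i]
            expanded.append(term)
    return expanded


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_probability(x, weights, bias, degree=2):
    """Logistic regression applied to the polynomial expansion of x."""
    phi = polynomial_features(x, degree)
    if len(phi) != len(weights):
        raise ValueError("weights must match the number of expanded features")
    z = bias + sum(w * p for w, p in zip(weights, phi))
    return sigmoid(z)


# Hypothetical inputs: two standardised features (for example age and BMI).
patient = [0.8, -0.3]
# Hypothetical weights for the terms [x0, x1, x0^2, x0*x1, x1^2].
weights = [0.9, 0.4, 0.2, -0.1, 0.3]
print(round(predict_probability(patient, weights, bias=-2.0), 3))
```

The polynomial terms let a linear model capture curved relationships and interactions between features while keeping each term’s weight inspectable, which fits naturally with an emphasis on explainability.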

The user interface is designed for ease of use and has been developed in alignment with the NHS Digital Service Manual and advisory toolkits to ensure accessibility and usability within healthcare settings.